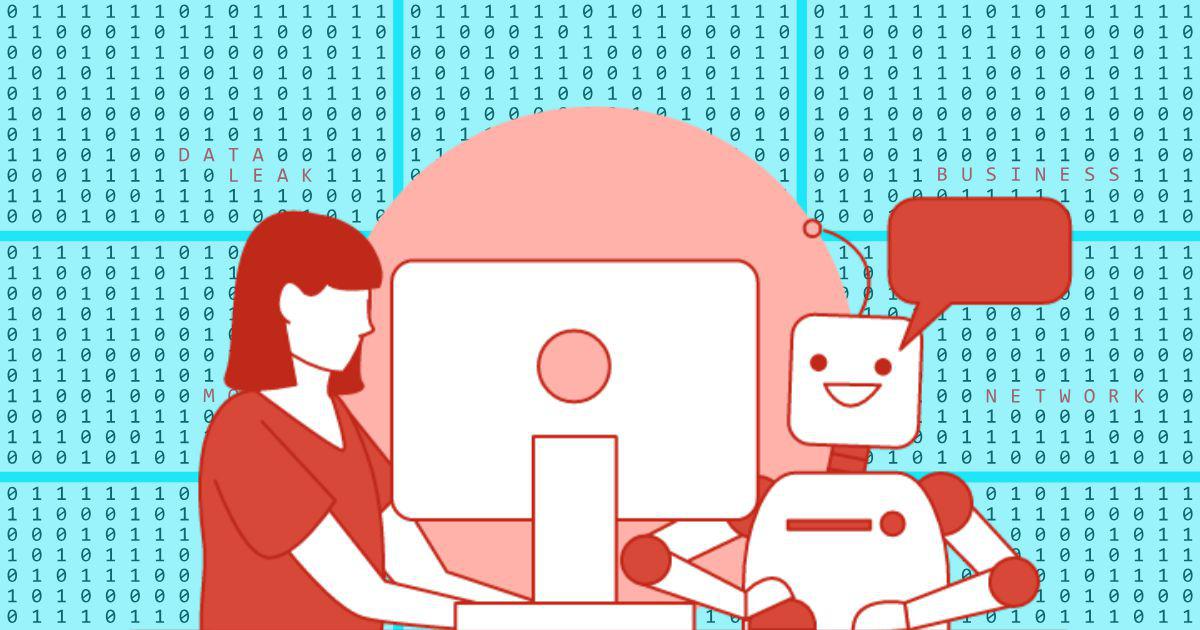

As young Indians turn to AI ‘therapists’, how confidential is their data?

This is the second of a two-part series. Read the first here.

Imagine a stranger getting hold of a mental health therapist’s private notes – and then selling that information to deliver tailored advertisements to their clients.

That’s practically what many mental healthcare apps might be doing.

Young Indians are increasingly turning to apps and artificial intelligence-driven tools to address their mental health challenges – but have limited awareness about how these digital tools process user data.

In January, the Centre for Internet and Society published a study of 45 mental health apps – 28 from India and 17 from abroad – which found that 80% gathered user health data that they used for advertising and shared with third-party service providers.

An overwhelming number of these apps, 87%, shared the data with law enforcement and regulatory bodies.

The first article in this series had reported that some of these apps are especially popular with young Indian users, who rely on them for quick and easy access to therapy and mental healthcare support.

Users had also told Scroll that they turned to AI-driven technology, such as ChatGPT, to discuss their feelings and get advice, however limited this may be compared to interacting with a human therapist. But they were not especially worried about data misuse. Keshav*,...
